Azure: How to Move/Transfer Files from Azure File Shares to Azure Blob Storage

Problem statement:

Recently we had a requirement to transfer data from on-premises and various vendor systems into the organization for internal consumption. The big question was: how do I transfer a large set of data? What is the best and easiest way to transfer the data and share it internally?

Resolution Path:

One of the most useful capabilities Microsoft offers for heavy data transfer is Azure Files. Azure Files provides fully managed file shares in the cloud that are accessible via the industry-standard Server Message Block (SMB) protocol, the Network File System (NFS) protocol, and the Azure Files REST API. Azure file shares can be mounted concurrently by cloud or on-premises deployments. SMB Azure file shares are accessible from Windows, Linux, and macOS clients, while NFS Azure file shares are accessible from Linux clients. Additionally, SMB Azure file shares can be cached on Windows servers with Azure File Sync for fast access near where the data is being used.

Part 1

The first part of the problem is copying files from on-premises to the Azure cloud. We can mount the Azure File Share on any on-premises or vendor VM and copy the data from the source to the Azure File Share as the destination. Make sure the SMB port (TCP 445) is open for outbound traffic. The Azure portal's Connect option on the file share generates a ready-made mount script, so this step is easy to automate.
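Once the share is mounted, the copy step is an ordinary file copy. The following is a minimal sketch. It assumes the share is already mounted at a local path such as `/mnt/source` on Linux or a drive letter such as `Z:\` on Windows (both paths are hypothetical). `copy_to_share` is an illustrative helper, not part of any Azure SDK.

```python
import shutil
from pathlib import Path


def copy_to_share(source_dir: str, share_mount: str) -> list:
    """Copy every file under source_dir into the mounted Azure file share,
    preserving the relative folder structure.

    share_mount is assumed to be the local mount point of the SMB share
    (hypothetical, e.g. "/mnt/source" or "Z:\\").
    Returns the relative paths that were copied, in sorted order.
    """
    source = Path(source_dir)
    destination = Path(share_mount)
    copied = []
    for path in sorted(source.rglob("*")):
        if not path.is_file():
            continue  # directories are created on demand below
        relative = path.relative_to(source)
        target = destination / relative
        target.parent.mkdir(parents=True, exist_ok=True)
        # copyfile copies only the data; it avoids permission/metadata calls
        # that some SMB mounts reject
        shutil.copyfile(path, target)
        copied.append(relative.as_posix())
    return copied
```

For example, `copy_to_share("/data/export", "/mnt/source")` would push a vendor export folder (hypothetical path) into the share. On a schedule, it could be combined with the portal-generated mount script.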

Part 2

The next part of the problem is moving files from Azure File Shares to individual teams. Most project teams use Azure Blob Storage containers, and Azure does not offer a native capability to move files from Azure File Shares to Azure Blob Storage. :(

I considered some Azure-native services, such as:

- Azure Data Factory (you pay a few cents for every pipeline execution)

- Azure Logic Apps (to run, you may need either a service account or a functional account)

However, I wanted a solution that balanced cost, security, and elasticity.

So the approach is to use the Azure File Share mount for ingestion, and a simple Python script to transfer the files from the file share to Blob containers.

Part 3

Here are the steps. I created a simple Python script that needs only the following parameters:

- Azure Blob Storage account name and container name

- Azure Files storage account name and file share name

- Azure Blob Storage account key

- Azure File Share account key
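One option is to read these values from environment variables set by whatever hosts the script, instead of hard-coding them. The following is a minimal sketch. The variable names (`FILE_ACCOUNT_NAME`, `BLOB_CONTAINER_NAME`, and so on) and the `TransferConfig` class are hypothetical and chosen only for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical environment variable names, mapped to the script's parameter names
ENV_VARS = {
    "file_account_name": "FILE_ACCOUNT_NAME",
    "file_account_key": "FILE_ACCOUNT_KEY",
    "file_share_name": "FILE_SHARE_NAME",
    "blob_account_name": "BLOB_ACCOUNT_NAME",
    "blob_account_key": "BLOB_ACCOUNT_KEY",
    "container_name": "BLOB_CONTAINER_NAME",
}


@dataclass(frozen=True)
class TransferConfig:
    """Hypothetical holder for the transfer script's parameters."""
    file_account_name: str
    file_account_key: str
    file_share_name: str
    blob_account_name: str
    blob_account_key: str
    container_name: str

    @classmethod
    def from_env(cls, env: Optional[Mapping[str, str]] = None) -> "TransferConfig":
        """Build the config from environment variables (os.environ by default).

        Raises ValueError listing every variable that is missing or empty.
        """
        env = os.environ if env is None else env
        missing = [var for var in ENV_VARS.values() if not env.get(var)]
        if missing:
            raise ValueError("Missing environment variables: " + ", ".join(missing))
        return cls(**{field: env[var] for field, var in ENV_VARS.items()})
```

The script would then use, for example, `config.blob_account_key` in place of the placeholder string. If the script runs in an Azure Function, these values can live in app settings, which can reference Azure Key Vault secrets.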

1. First, someone uploads a new file to the Azure File Share.

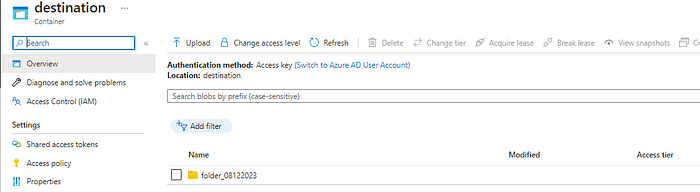

2. The destination Blob container is currently empty (no files exist).

3. Before executing the script, run the following command to install the required Python libraries. This is a one-time activity.

```shell
pip install azure-storage-file-share azure-storage-blob
```

4. To move the files, run the script:

```shell
python <filename.py>
```

After execution, the files are no longer in the source Azure file share.
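The moved files land in a date-stamped "folder" in the destination container. In a standard Blob Storage container (without hierarchical namespace), folders are just blob name prefixes. The script also flattens share subdirectories into the blob name by replacing `/` with `_`. The mapping can be isolated as a small pure function. `blob_path_for` is a hypothetical helper that mirrors the script's naming logic.

```python
from datetime import datetime, timezone


def blob_path_for(relative_path: str, run_time: datetime) -> str:
    """Map a file's path in the share to its destination blob name:
    folder_<MMDDYYYY>/<relative path with '/' replaced by '_'>."""
    subfolder_name = f"folder_{run_time.strftime('%m%d%Y')}"
    return f"{subfolder_name}/{relative_path.replace('/', '_')}"


# A file in a share subdirectory, moved on 15 June 2024 (UTC)
print(blob_path_for("vendorA/report.csv", datetime(2024, 6, 15, tzinfo=timezone.utc)))
# prints folder_06152024/vendorA_report.csv
```

This convention has one side effect. `vendorA/report.csv` and a top-level file named `vendorA_report.csv` map to the same blob name. Because the script uploads with `overwrite=True`, the file processed later silently replaces the earlier one.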

Here is the full script.

```python
from datetime import datetime, timezone

from azure.storage.blob import BlobServiceClient
from azure.storage.fileshare import ShareServiceClient

# Define your Azure Storage account information
file_account_name = 'iottransferstorage'
file_account_key = 'Replace storage account key or fetch from Azure keyvault'
file_share_name = 'source'

blob_account_name = 'iottransferstorage1'
blob_account_key = 'Replace storage account key or fetch from Azure keyvault'
container_name = 'destination'

# Initialize the Azure File Share client and get the root directory of the source share
file_account_url = f"https://{file_account_name}.file.core.windows.net"
file_service_client = ShareServiceClient(account_url=file_account_url, credential=file_account_key)
file_share_client = file_service_client.get_share_client(file_share_name)
root_directory_client = file_share_client.get_directory_client()


def list_files(directory_client, parent_path=""):
    """Recursively yield (relative_path, file_client) for every file in the share."""
    for item in directory_client.list_directories_and_files():
        item_path = f"{parent_path}/{item.name}" if parent_path else item.name
        if item.is_directory:
            # Recurse into the subdirectory
            subdirectory_client = directory_client.get_subdirectory_client(item.name)
            yield from list_files(subdirectory_client, item_path)
        else:
            # Build the file client from the directory that contains the file,
            # so files in subdirectories resolve correctly
            yield item_path, directory_client.get_file_client(item.name)


# Initialize the Azure Blob Storage client
blob_account_url = f"https://{blob_account_name}.blob.core.windows.net"
blob_service_client = BlobServiceClient(account_url=blob_account_url, credential=blob_account_key)

# Date-stamped destination "subfolder" (a blob name prefix), e.g. folder_06152024
current_date = datetime.now(timezone.utc).strftime("%m%d%Y")
subfolder_name = f"folder_{current_date}"

# List everything first so files are not deleted while the share is still being enumerated
for relative_path, source_file_client in list(list_files(root_directory_client)):
    # Download the file content (readall() loads the whole file into memory)
    file_contents = source_file_client.download_file()

    # Upload to the subfolder, flattening the share path: dir1/report.csv -> dir1_report.csv
    blob_file_name = relative_path.replace('/', '_')
    blob_file_path = f"{subfolder_name}/{blob_file_name}"
    blob_file_client = blob_service_client.get_blob_client(container=container_name, blob=blob_file_path)
    blob_file_client.upload_blob(data=file_contents.readall(), overwrite=True)

    # Delete the file from the Azure File Share (only reached if the upload succeeded)
    source_file_client.delete_file()

print("File migration and deletion completed.")
```

This script can be automated using any of the following options:

- A scheduled task on an Azure VM

- An Azure Function that runs the Python script

- An Azure Automation runbook